Yi Xiao's Personal Website

About me

I have been a Ph.D. candidate in Computer Science and a research associate in the Laboratory for Ubiquitous and Intelligent Sensing (UIS Lab) at Arizona State University since Fall 2021. I am honored to be advised by Dr. Asif Salekin.

I have extensive experience in multimodal learning, sensor-based computer vision, time-series analysis, large language models, and foundation models, with a focus on human-centered AI. My research centers on applied AI: developing robust, interpretable, and generalizable models for real-world sensing scenarios. I design novel frameworks to tackle challenges such as domain shift, data scarcity, and noisy multimodal inputs, with applications across wearable sensing, vision, audio, and language. I have published 8 papers in top computer science venues, including 3 as first author.

Education

Ph.D. in Computer Science, Arizona State University, Tempe, AZ, USA (Expected 2026)

M.S. in Computer Science, Syracuse University, Syracuse, NY, USA (2021)

B.S. in Computer Science and Technology, Jilin University, Jilin, China (2019)

🎓 Selected Research

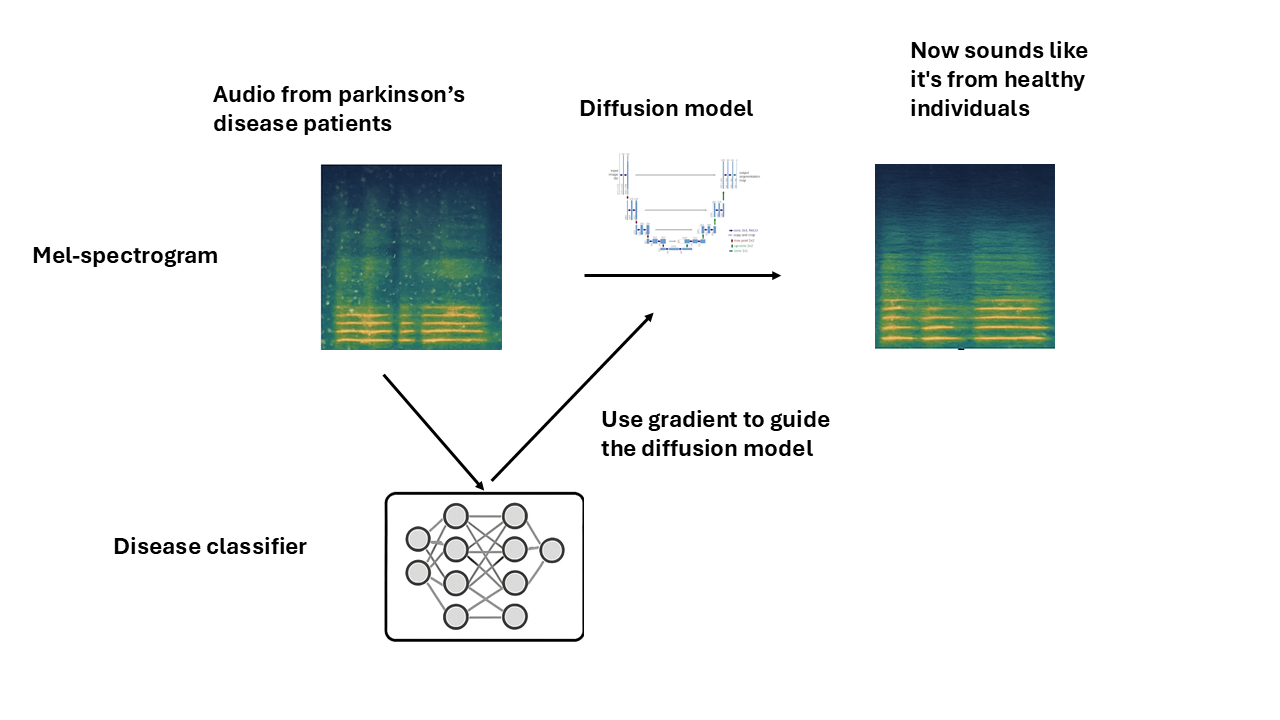

| Counterfactual Generation of Audio Mel-Spectrograms Using Diffusion Models. Leverages classifier-generated gradients to guide a diffusion model in generating class-specific, realistic counterfactual mel-spectrograms, achieving high realism scores and enhanced interpretability. |

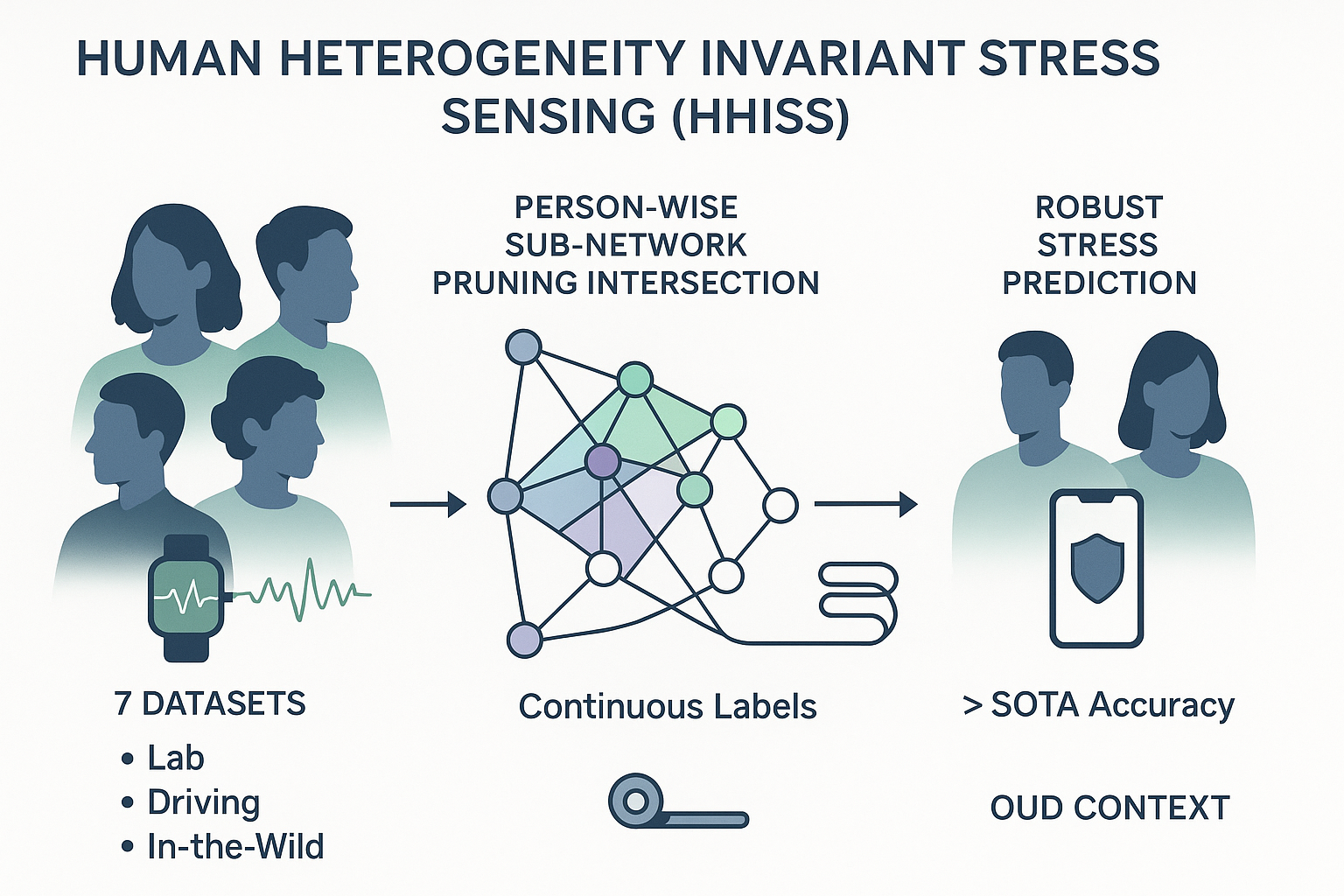

| Human Heterogeneity Invariant Stress Sensing. Proposed a novel pruning and quantization algorithm that significantly enhances an AI model's generalizability, leading to a 7.8% improvement in stress detection accuracy in completely unseen environments. |

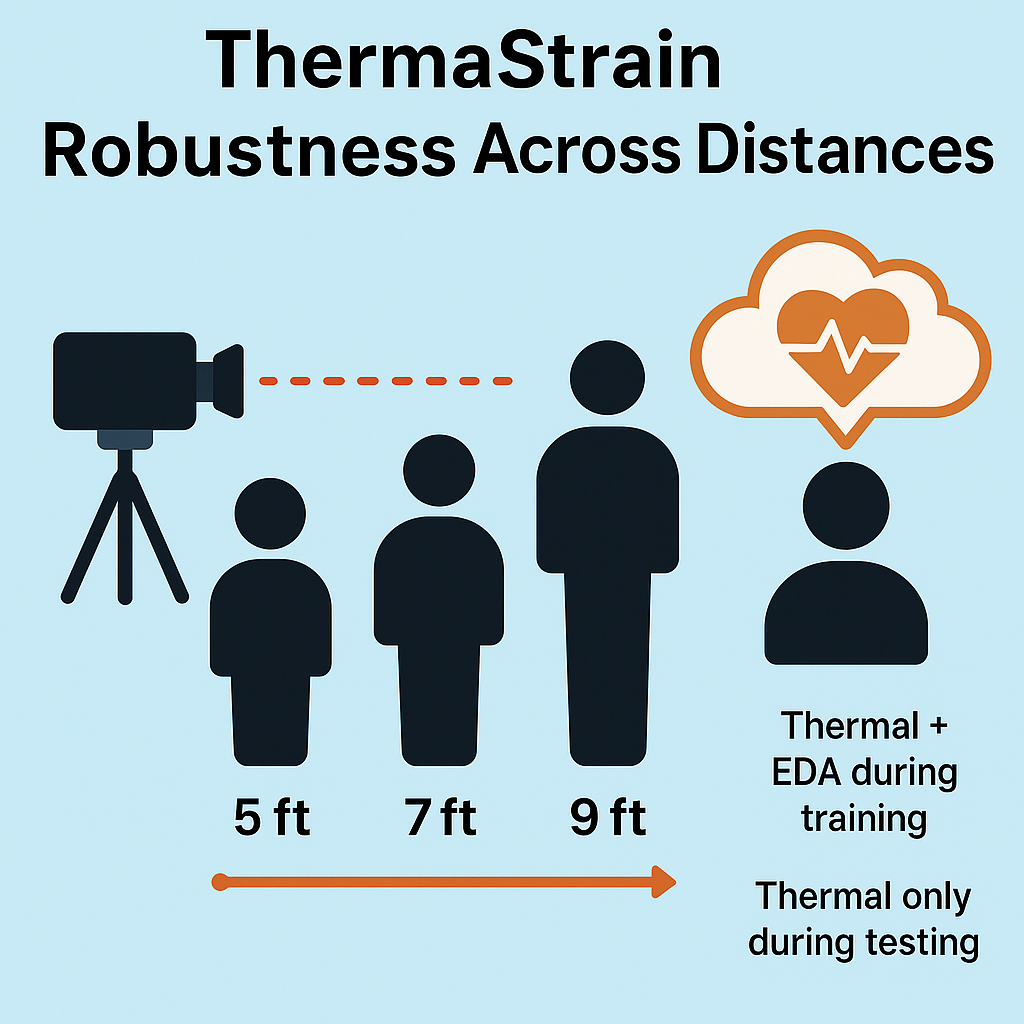

| Reading Between the Heat. Training AI models with multimodal data (EDA + thermal) using a shared backbone allows the model to learn richer embeddings and more discriminative decision boundaries. This approach yields a performance gain of over 10% when the thermal modality is deployed alone. |

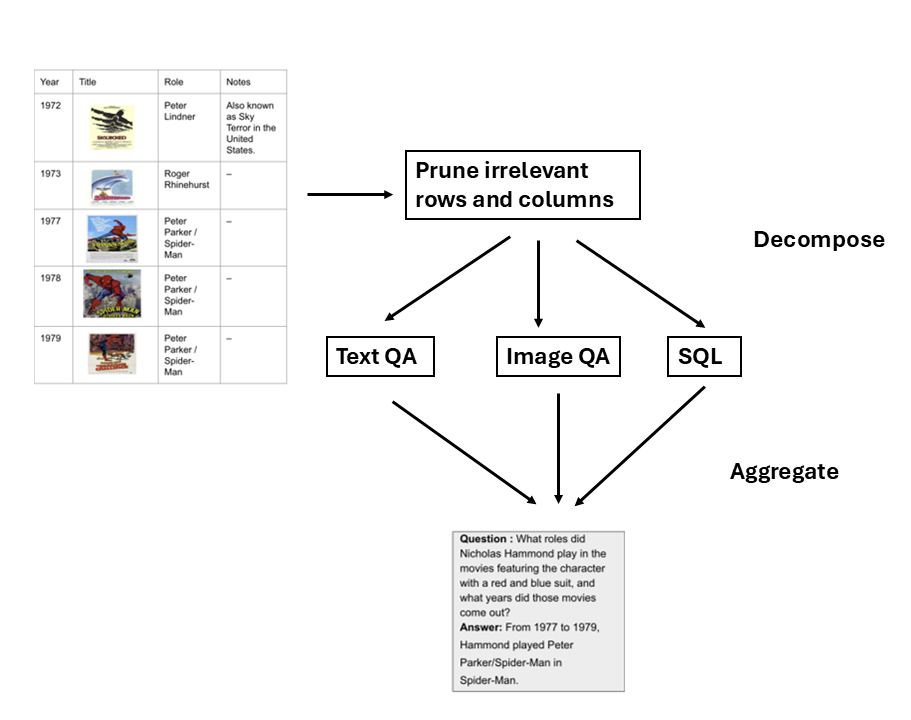

| Optimizing LLM Query Execution in Semi-Structured Table Question Answering. Decomposing questions into image and table components and retrieving the relevant data improves the LLM's exact-match (EM) accuracy by 8.5% on the MultiModalQA dataset. |

Skills

- Programming Languages: Python, MATLAB, C/C++, Java, SQL, SPSS

- Tools & Frameworks: PyTorch, TensorFlow, NumPy, pandas, scikit-learn, Linux, Docker, Bash, AWS

- Deep Learning & ML: Transformers, Large Language Models (LLMs), Vision-Language Models, Foundation Models, Supervised Fine-Tuning, RLHF, Reinforcement Learning, Self-Supervised Learning, Neural Networks, Multimodal Learning, Statistical Learning

- Applications: Generative AI, Speech Recognition, Natural Language Processing (NLP), Computer Vision, Recommendation Systems, Question Answering, Time Series Forecasting, Face Detection, Clustering, Semantic Search, Sentiment Analysis

📰 News

- 2025-07: Our paper "Human Heterogeneity Invariant Stress Sensing" accepted at IMWUT/UbiComp 2025 🎉

- 2025-05: Glad to have presented our work in the medical cyber-physical systems track at CPS-IoT Week 2025, Irvine, CA, USA.

- 2025-05: Our paper "Psychophysiology-aided Perceptually Fluent Speech Analysis of Children Who Stutter" accepted at ICCPS 2025.

- 2025-04: Our paper "CRoP: Context-wise Robust Static Human-Sensing Personalization" accepted at IMWUT/UbiComp 2025. 🎉

- 2024-07: Our paper "VeriCompress: A Tool to Streamline the Synthesis of Verified Robust Compressed Neural Networks from Scratch" accepted at the AAAI Conference on Artificial Intelligence (AAAI 2024) 🎉

- 2024-10: My "Reading Between the Heat" paper was presented at UbiComp 2024, Melbourne, Australia.

- 2023-10: Our paper "Reading Between the Heat: Co-Teaching Body Thermal Signatures for Non-intrusive Stress Detection" accepted at IMWUT/UbiComp 2024 🎉

- 2023-10: Our paper "Classifying Rhoticity of /r/ in Speech Sound Disorder using Age-and-Sex Normalized Formants" accepted at InterSpeech 2024 🎉

- 2023-07: Our paper "Privacy against Real-Time Speech Emotion Detection via Acoustic Adversarial Evasion of Machine Learning" accepted at IMWUT/UbiComp 2023 🎉

- 2022-07: Our paper "Psychophysiological Arousal in Young Children Who Stutter: An Interpretable AI Approach" accepted at IMWUT/UbiComp 2022 🎉

Publications

Xiao, Y., Sharma, H., Kaur, S., Bergen-Cico, D., Salekin, A.

Human Heterogeneity Invariant Stress Sensing

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2025

Xiao, Y., Sharma, H., Tumanova, V., Salekin, A.

Psychophysiology-aided Perceptually Fluent Speech Analysis of Children Who Stutter

ACM/IEEE 16th International Conference on Cyber-Physical Systems (CPS-IoT Week 2025), 2025

Xiao, Y., Sharma, H., Zhang, Z., Bergen-Cico, D., Rahman, T., Salekin, A.

Reading Between the Heat: Co-Teaching Body Thermal Signatures for Non-intrusive Stress Detection

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2023

Kaur, S., Xiao, Y., Salekin, A.

VeriCompress: A Tool to Streamline the Synthesis of Verified Robust Compressed Neural Networks from Scratch

AAAI Conference on Artificial Intelligence, 2024

Testa, B., Xiao, Y., Sharma, H., Gump, A., Salekin, A.

Privacy against Real-Time Speech Emotion Detection via Acoustic Adversarial Evasion of Machine Learning

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2023

Sharma, H., Xiao, Y., Tumanova, V., Salekin, A.

Psychophysiological Arousal in Young Children Who Stutter: An Interpretable AI Approach

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2022

Kaur, S., Gump, A., Xiao, Y., Xin, J., Sharma, H., Benway, N. R., Preston, J. L., Salekin, A.

CRoP: Context-wise Robust Static Human-Sensing Personalization

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2025

Benway, N. R., Preston, J. L., Salekin, A., Xiao, Y., Sharma, H., McAllister, T.

Classifying Rhoticity of /r/ in Speech Sound Disorder using Age-and-Sex Normalized Formants

INTERSPEECH 2023, 2023